Tech giants are making a major strategic shift by designing custom AI chips for their cloud services. The move responds to a fast-growing AI market, projected to expand from $17 billion in 2022 to $227 billion by 2032. Companies including Microsoft, Amazon, and Google are entering hardware development not to sell physical chips, but to embed them within their cloud offerings.
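To put that projection in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The short Python sketch below does this. The function name `implied_cagr` is illustrative, and the calculation assumes smooth year-on-year compounding, which real markets rarely follow.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the projection: $17 billion (2022) to $227 billion (2032).
rate = implied_cagr(17, 227, 2032 - 2022)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, the projection corresponds to growth of just under 30% per year, sustained for a decade.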

In the ‘Wintel’ PC era, hardware standardisation made it harder for firms to stand out. Cloud computing has changed the playing field again: competition was initially driven by software, and is now pivoting towards AI and the need for high-performance, specialised chips. As a result, the largest tech companies are designing their own silicon, purpose-built for cloud AI services.

From mainstream to exclusive

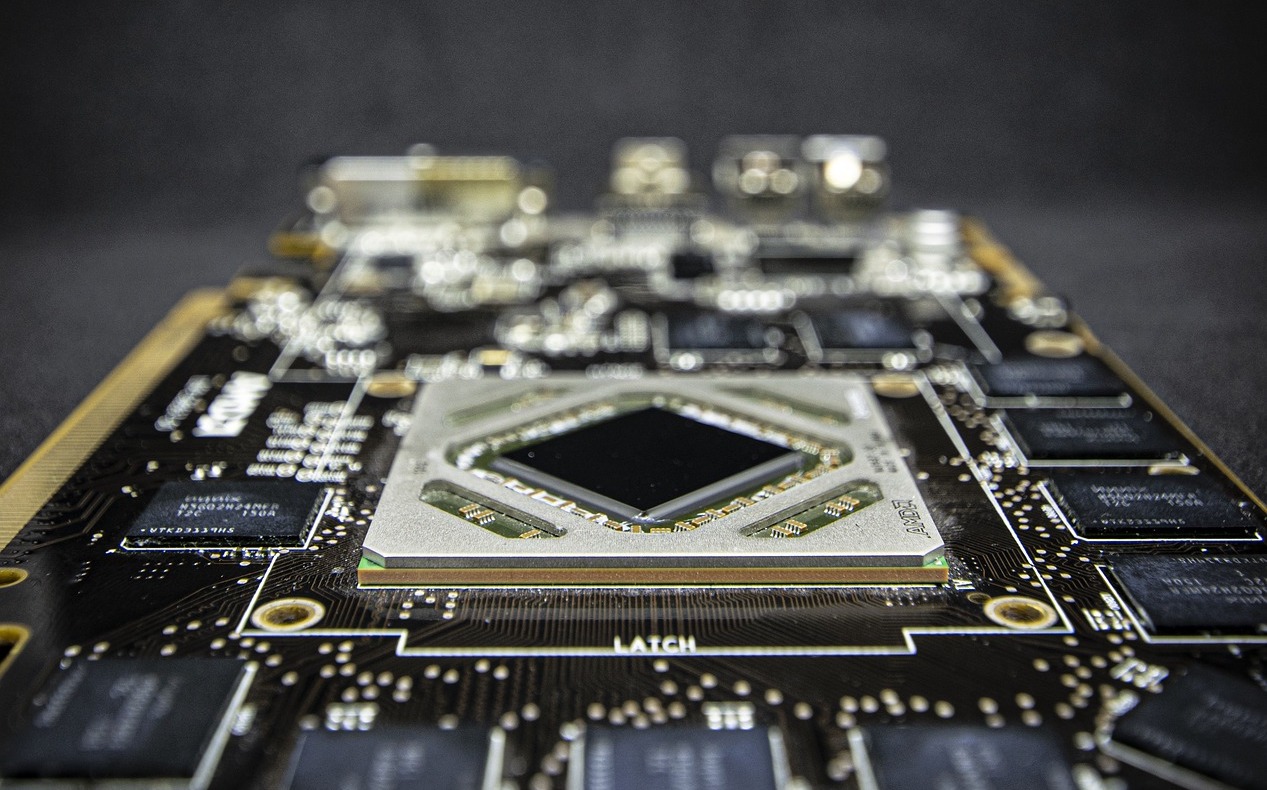

Originally, customers bought hardware for their compute needs. This hardware was made by a myriad of vendors, which in turn bought their components from a select group of suppliers. For a long time, the main component of a computer was the CPU, and the main supplier of CPUs was Intel. Much has changed since then. Customers now use cloud computing, where hardware and software are provided as a service (HaaS and SaaS). At first, this was done with the same hardware customers used to buy. Then Google and others began buying the components and building computers tailored to their data centres and their way of working. And now companies mainly known for software and services are developing their own chips: not just CPUs, but powerful AI chips.

As reported by The Verge, Microsoft is following this trend with Azure Maia, an AI accelerator, and Azure Cobalt, an Arm-based CPU. By developing custom chips, Microsoft aims to reduce its dependence on suppliers like Nvidia and enhance its cloud AI offerings.

The competitive edge of custom silicon

Unlike Central Processing Units (CPUs), which are optimised for largely sequential work, custom AI chips excel at parallel processing, a boon for AI workloads. Tailored features such as more cores, vector and tensor units, and faster, higher-bandwidth memory set these chips apart. Their introduction is central to market growth, and companies like OpenAI are reportedly contemplating their own AI chips, which could increase competition among the largest players. Nvidia currently leads the data centre market, while AMD keeps pace with new AI accelerators and business alliances.
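Why AI workloads reward parallel hardware can be illustrated with matrix multiplication, the operation at the core of most neural networks. In the sketch below (plain Python, with the illustrative names `output_cell`, `matmul`, and `shuffled`), every output cell depends on only one row and one column of the inputs, so the cells can be computed in any order, or all at once. This is a conceptual illustration only, not a description of how any particular chip is programmed.

```python
import random


def output_cell(a, b, row, col):
    """Compute one cell of a @ b; it needs only one row of a and one column of b."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b, order=None):
    """Multiply two matrices, filling output cells in the given order."""
    rows, cols = len(a), len(b[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    if order is not None:
        cells = order(cells)
    result = [[0] * cols for _ in range(rows)]
    for r, c in cells:
        # No cell reads another cell's result, so this loop could run in parallel.
        result[r][c] = output_cell(a, b, r, c)
    return result


def shuffled(cells):
    """Return the cells in a random order to mimic out-of-order execution."""
    cells = list(cells)
    random.shuffle(cells)
    return cells


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b) == matmul(a, b, order=shuffled))
```

Because the result is the same whatever the order, hardware with many cores or dedicated tensor units can work on thousands of such cells simultaneously, whereas a single sequential core has to step through them one by one.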

Challenges for smaller players

Smaller companies face hurdles in this new model. Conventional hardware can still be sourced from Nvidia and AMD, but custom silicon built by the largest cloud providers raises barriers to entry and creates differentiation that smaller competitors struggle to match.

Even so, smaller firms and start-ups are carving out niches within the AI ecosystem, offering specialised services where larger companies have not yet cemented their presence. They might target medium-sized businesses or specific sectors. This flexibility is crucial for keeping competition alive and promoting industry-wide innovation.

Looking ahead: sustainability and security

While tech giants expand their AI chip development, concerns remain that the pivot to service-based models could slow cutting-edge advances. Companies must reconcile the drive for innovation with sustainability imperatives. Integrating hardware and services also raises complex data security challenges, which call for robust mitigation strategies and for building customer trust through transparency and accountability. Because the shift to SaaS is already underway, some of these challenges are partly addressed by established legal frameworks around data ownership and security.

Conclusion

A seismic shift is taking place in hardware development, with AI chips driving the transformation. Technology giants are deploying proprietary silicon to power their cloud services and secure market advantages. As industry norms evolve, smaller companies must find their unique offering and innovate within the new market framework. Security and innovation will require careful strategic handling by all industry players.